There has been no great theoretical revolution in neuroscience. But that does not mean that no revolution will ever come. Neuroscience is still young.

It’s a curious time to be a neuroscientist. The science of brain and behaviour is everywhere: endless books, documentaries, newspaper articles and conferences report new findings aplenty.

The recognition by the general public that the brain deserves serious attention is gratifying. Much of this interest derives from worries about maintaining brain health. Disorders of brain and behaviour (from anxiety and depression to brain tumours and Alzheimer’s disease) come with enormous costs to both individuals and health systems. Consequently, many private and public agencies support wonderful research in neuroscience. The Wellcome Trust, for example, funds a vast and far-reaching programme extending from studying individual molecules all the way to imaging the working brain. In the US, both the National Institute of Mental Health and the Defense Advanced Research Projects Agency (Darpa) support large neuro-research programmes – partly driven in the latter’s case by the desperate need for viable treatments for brain trauma deriving from blast injuries in active service personnel.

Philanthropy is also active: my own institution, Trinity College Dublin, recently received a joint endowment with the University of California, San Francisco of €175 million (£134 million) for work on brain health – the single largest endowment in our history.

And yet there are misgivings. The deep answers to the problems that impact on public health and well-being are not coming quickly enough. The hundred or so failed drug trials for Alzheimer’s disease have come at a cost reckoned in the billions; these are huge sums for any pharmaceutical company to absorb, and many have now written off research in brain diseases as too complex and too costly to sustain – blocking off one potential career destination for neuroscience graduates in the process.

Answers to big basic questions also seem a long way off. Even if this trend is now in decline, there have historically been too many papers reporting results along the lines of “brain area x does trivial function y”. The brain is, by definition, more complex than our current models of it, and it is only by embracing that complexity that we will be able to address questions such as: How can a brain be conscious? How can a brain experience diffidence or embarrassment, or reason in a moral fashion – and be simultaneously aware that it is so doing? How can a brain play rugby? Should a brain play rugby?

A few simple principles aside, there has been no great theoretical revolution in neuroscience comparable to those precipitated in other disciplines by Darwin, Newton or Crick and Watson. But that does not mean that no revolution will ever come. Neuroscience is still a young discipline, reflected by the fact that many undergraduate programmes still rely on matrix arrangements between multiple home departments (chiefly psychology, physiology and biochemistry).

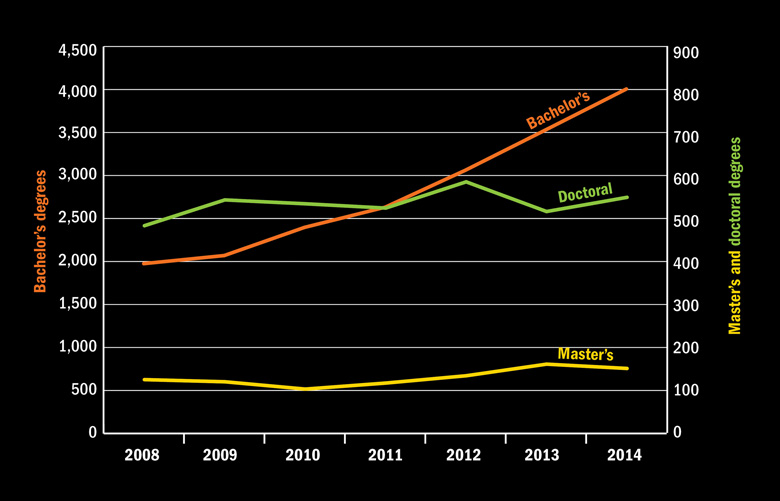

Number of neuroscience degrees conferred in the US

Source: US National Center for Education Statistics

Meanwhile, recent controversies over the replicability and reliability of research studies have been healthy, as they expose limits to knowledge. They have sharpened understanding of the dangers of basing conclusions on experiments that lack sufficient statistical power (because of, for instance, low numbers of research participants) or on hypotheses retrofitted in light of the results.

Other anxieties revolve around definitional issues: where does neuroscience stop and psychology or molecular biology start? But really, nobody should care too deeply about such questions: there are no knowledge silos in nature, and man-made silos aren’t useful. Knowledge blending is the game: it’s good to know something about the engine, the engineering principles and the nuts and bolts of the car you drive: not just the dynamic relationships between the steering wheel, accelerator, brake and petrol gauge. To take one example, there has been great mutual enrichment between socio-psychological theories concerned with stereotyping and those concerned with the brain’s mentalising network (activated when we attempt to understand agency in others). It turns out that brain regions involved in disgust are activated when we make judgements about members of despised out-groups. This is an important finding, integrating psychological processes involved in stereotyping into more general biological processes concerned with cleanliness and self-other differentiation.

Yet further anxiety is generated by neuroscience’s encroachment into public policy. We see the almost obligatory “neuro” prefix attached to concepts from ethics to politics, leadership, marketing and beyond. No wonder the great “neurobollocks” rejoinder, blog and meme have arisen. There are regular calls to apply neuroscience in classrooms, for example, despite there being no meaningful knowledge base to apply. Similar pleas arise for the use of brain imaging in the courtroom, as if the underlying science to detect the presence (or absence) of lying were settled. It is not. And the public will have been done no favours if one form of voodoo science (lie detection polygraphy) is substituted by another. The background thinking, of course, has not been done: a science that revealed actual thoughts (as opposed to coloured blobs representing neural activity) would be a remarkable violation of our assumed rights to cognitive privacy. There are lots of sticky questions here for the willing (neuro-) ethicist to ponder.

But one useful effect of the popular focus on the brain is destigmatisation. Seeing conditions such as addiction as a brain and behaviour disorder rather than a moral failing facilitates understanding and treatment – although, ironically, the therapeutic potential of psychedelic drugs for treating depression is being obstructed by unhelpful rules based on inappropriate worries about addiction.

Adding to the ferment are new neurotechnologies. Some are potentially dangerous, such as the use of commercially bought or even home-made electrical devices known as transcranial direct current stimulators to “enhance” brain function, or the off-label experimentation with supposed cognitively enhancing drugs that some students indulge in during revision and exams. But other technologies are astounding: brain imaging, optogenetics (which uses light to control genetically modified neurons in living tissues) and deep-brain stimulation (which uses a surgically implanted device to treat neurological disorders with targeted electrical impulses) are just three examples.

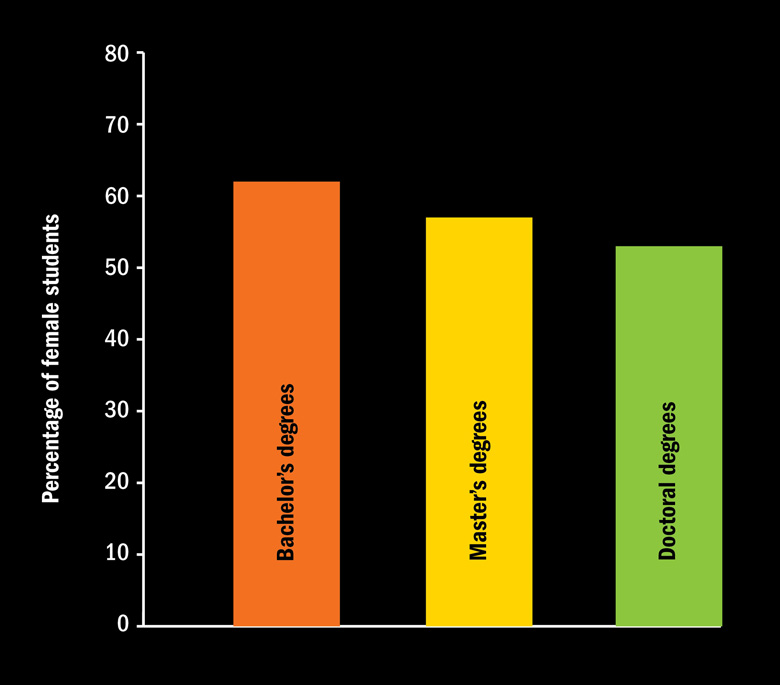

Average gender split of students taking neuroscience degrees in the US, 2008-2014

Source: US National Center for Education Statistics

But, as with all new therapeutic treatments and devices, there is always the question of how scalable they are. A successful pharmacotherapy-based treatment for Alzheimer’s disease would scale easily, but deep-brain stimulation for drug-resistant Parkinson’s disease involves serious and very expensive neurosurgery. Of course, restoring individual productive potential should be important to the bean counters; restoring quality of life to sufferers is beyond value. But only about 100,000 patients have had this operation; scaling it to all sufferers worldwide is a pipe dream.

There are early interventions that could have great effect by addressing prevention rather than cure. Early childhood poverty, for instance, has enduring effects on brain structure and function: relieving it through income support, school meal provision and intensifying education has an upfront expense but a great downstream benefit in terms of productive lives supported. Similarly, aerobic exercise interventions promote brain and cognitive function, in addition to heart health. But only public intervention is going to promote such things because there is no money to be made from them for a pharmaceutical company.

And while we are (again) on the subject of money, it is worth reflecting that, notwithstanding the billion-euro and billion-dollar brain projects currently being carried out in Europe and the US (see Steven Rose’s piece), research into diseases such as dementia still receives much less funding than research into cancer.

Perhaps that balance could be redressed if there were one catch-all term for diseases and disorders of the brain, just as “cancer” designates a wide array of fundamental and applied research in cell biology, applied to a difficult patient condition.

It is not easy to think of something suitable. “Neurodegenerative disorders” doesn’t work, for example: it has too many syllables, and misses the many other brain disorders that are not neurodegenerative (such as attention deficit disorders or addictions). But here’s a thought: just as “malware” is used to indicate functional or structural problems with a given information technology device, perhaps we could use “malbrain” to mean something like “any disorder, dysfunction, structural problem or pathophysiological problem afflicting the brain, impairing normal neurological, psychological and psychiatric functioning of an individual”.

“Malbrain” has advantages as a word. It hasn’t been widely used before, it has few syllables and it doesn’t come with any stigma. Adopting it would not instantly erase neuroscience’s problems, but if it drew in more medical funding it could help the discipline further mature, opening up career options, enhancing the sense of common purpose among researchers and, hopefully, edging one or more of them closer to their Einstein moment.

Shane O’Mara is professor of experimental brain research at Trinity College Dublin and was director of the Trinity College Institute of Neuroscience from 2009 to 2016. His latest book, Why Torture Doesn’t Work: The Neuroscience of Interrogation, was published by Harvard University Press in 2015.

The technologies are there, the problems to be addressed are tempting and the theoretical issues are profound, touching some of the deepest questions about what it means to be human

Neuroscience has become one of the hottest fields in biology in the half-century since the term was coined by researchers at the Massachusetts Institute of Technology. With the mega-projects under way in the European Union and the US, the discipline can now qualify as a full-fledged Big Science.

As neuroscience has expanded, the “neuro” prefix has reached out far beyond its original terrain. For our new book, Hilary Rose and I counted no fewer than 50 instances, from neuroaesthetics to neurowar, by way of neurogastronomy and neuroepistemology. “Neuro” is intervening in the social and political, too. We have neuroeducation, neuromarketing and neurolaw. In public consciousness, the glowing, false-coloured magnetic resonance images of the brain, ostensibly locating the “seats” of memory, mathematical skill or even romantic love, have replaced DNA’s double helix as a guarantor of scientific certainty.

Meanwhile, the torrent of neuro-papers pouring out of labs overspills the proliferating specialist journals and threatens to take over much of Nature and Science. A wealth of new technologies has made it possible to address questions that were almost inconceivable to my generation of neuroscientists. When, as a postdoctoral researcher, I wanted to research the molecular processes that enable learning and underlie memory storage in the brain, my Nobelist superiors told me firmly that this was no fit or feasible subject for a biochemist to study. Today, memory is a mainstream field for molecular neurobiologists; it has yielded its own good-sized clutch of Nobel prizes, and ambitious neuroscientists are reaching out to claim the ultimate prize of reducing human consciousness to brain processes.

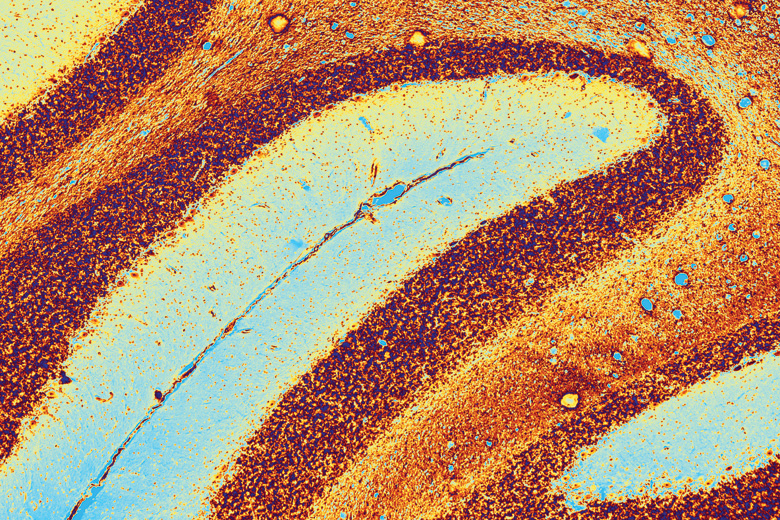

What has proved most productive has been the combination of new genetic and imaging techniques. The well-established methods of deleting specific genes from, or inserting them into, the developing mouse and exploring their effect on brain structure or behaviour have been superseded. It is now possible to place the modified genes into specific brain regions and to switch them on or off using electronically directed light, allowing researchers to activate or erase specific memories, for instance. The new imaging techniques are so powerful that they even make it possible to track the molecular events occurring in individual synapses – the junctions between nerve cells – as chemical signals pass across them.

But such technical and scientific triumphs may pale into insignificance when faced with the complexity of the brain. To see how far there is to go, consider the ostensible goal of the EU’s Human Brain Project: to model the human brain and all its connections in a computer and thereby develop new forms of “neuromorphic” computing. The scale of the task and the grandiosity of the ambition are indicated by the fact that in 2015, after six years of painstaking anatomic study, a team of US researchers published a complete map of a minuscule 1,500 cubic micrometres of the mouse brain – smaller than a grain of rice. And the mouse brain’s weight is about 1/3,000th of that of the human brain – although this didn’t inhibit the journal’s press release from suggesting that the map might reveal the origins of human mental diseases.

What might a complete model of the human brain reveal if one could be built? Potentially very little. For we still lack any overarching theory of how the brain works – not in the sense of its minute molecular mechanisms or physiological processes, but how brain processes relate to the actual experience of learning or remembering something, solving a maths problem or being in love. What is certain is that these experiences are not statically located in one brain site, but engage many regions, linked not just through anatomical connections but by the rhythmic firing of many neurons across many brain regions. It may be that, despite its imperialising claims, neuroscience lacks the appropriate tools to solve what neuroscientists and philosophers alike refer to as “the hard problem” of consciousness.

Perhaps of more general concern is the question of what neuroscience can contribute to the pressing problems of neurological disease and mental illness. Where biology is still unable to provide methods to regenerate severed spinal nerves to overcome paralysis, advances in ICT have come to the rescue, with the development of brain-computer interfaces and prostheses, offering hope of bypassing the severed nerves and restoring function. But despite detailed knowledge of the biochemistry and pathology underlying Alzheimer’s and other dementias, there are still only palliative treatments available.

Furthermore, despite the funds poured into the brain sciences by the pharmaceutical industry, there have been few advances in treating those with mental disorders, from depression to schizophrenia. The newer generations of antidepressant drugs, for example, work no better than those discovered or synthesised at the dawn of the psychopharmacological era in the 1960s. All are based on the proposition that the origins of these disorders lie in some malfunction of the processes by which neurons communicate with one another, primarily through chemical transmission across synapses. Plausible though this sounds, the continued failure to come up with better treatments has even led many biologically oriented psychiatrists to question the entire paradigm. In the US, the National Institutes of Health will no longer accept grant applications related to psychiatric disorders unless they can specify a clear hypothesis and a biological target. And I have lost count of the number of times in the past few decades that the discovery of a “gene for” schizophrenia has been loudly trumpeted, only to be quietly buried a few months later. A consequence has been that many pharmaceutical companies have rowed back from such research in favour of more tractable areas.

So how to sum up the state of neuroscience? If one sets aside general issues about the state of academia, such as job insecurity, the ferocious competition for grants and the increasingly authoritarian structure of universities, there has never been a more exciting time to be working in the field. The technologies are there, the problems waiting to be addressed are tempting and the theoretical issues are profound, touching both the minutiae of day-to-day life and some of the deepest questions about what it means to be human.

But, in approaching them, neuroscientists must learn some humility. Ours is not the only game in town. Philosophers, social scientists, writers and artists all have things of importance to say about the human condition. And neuroscientists who offer to use their science to educate the young or adjudicate morality in courts of law should proceed with utmost caution.

Steven Rose is emeritus professor of neuroscience at the Open University. Co‑written with Hilary Rose, his latest book, Can Neuroscience Change Our Minds?, was published by Polity Press in June.

The ‘black box’ that has squatted resolutely between genes and specific behaviours for such a long time is now being filled with real mechanistic insight

I was at a meeting recently where a speaker declared that “in the neurosciences, we have experienced the excitement of technical innovation, followed by inflated expectation, and now we have entered the trough of disappointment”. This depiction surprised me. Not just because it is a cliché, trotted out and used to describe the current status of topics as diverse as graphene and the Great British Bake Off, but also because it is palpably wrong.

Wanting to get to the root of the speaker’s confusion, I enquired over dinner if he was getting enough sleep. He said “tiredness stalks me like a harpy”. Interesting. The rationale for my question was a recent study showing that sleep-deprived individuals retain negative or neutral information, while readily forgetting information with a positive content. I concluded that sleep deprivation must be at the root of his distorted and overly negative views. As I articulated my counterarguments, his eyes glazed over and his head dipped. I rest my case.

I sleep well, and so remain immensely positive about the current state of neuroscience. But why? What positive knowledge and experiences have I retained and consolidated in my cortex? The first would be the immense culture change that many of us have experienced over the past 20 years. Traditionally, questions in neuroscience were addressed by a single laboratory using a limited repertoire of techniques. The work usually focused on a specific neuron, or neuronal circuit, located in a favoured animal model. Some individuals spent their entire working life hunched over “their” electrophysiological rig collecting data from “their” neuron. Just moving the electrode a few millimetres and “poaching” the neuron of another was considered to be the height of predatory aggression.

Most neuroscientists were more than aware of the limitations of this narrow approach. Ready for change and helped by surprisingly innovative funding initiatives, they found a new way of working – not just with other neuroscientists but across the spectrum of biomedical science. There are now countless examples of major questions being addressed by a critical mass of researchers sharing expertise and employing integrated approaches and communal facilities.

The result is that detailed information is emerging about the molecular and cellular basis of core functions of the brain, providing a real understanding of how the brain is involved in autonomic, endocrine, sensory, motor, emotional, cognitive and disease processes. All these developments, along with advances in bioinformatics and computational modelling, now place the neuroscience community in an unparalleled position to address the bigger picture of how the brain functions through its synchronised networks to produce both normal and abnormal behaviour. Furthermore, the expansion of experimental medicine is providing new and exciting research opportunities. The human genotype-to-phenotype link, studied through close cooperative contacts between clinical and non-clinical researchers, is an increasingly important driver in elucidating fundamental mechanisms.

True – neuroscientists have yet to answer the question of “what is consciousness?”, or to cure dementia or schizophrenia. We may not be able to do this for some time. But should these great and laudable goals be the only metrics against which progress is measured? If so, then spectacular successes will be overlooked. Across the neurosciences, important fundamental questions are being answered: not least, how genes give rise to specific behaviours. In my own field, the collective efforts of many individuals have begun to explain in considerable detail how circadian rhythms arise from an interaction between key “clock genes” and their protein products. We are also beginning to understand how multiple individual clock cells are able to coordinate their efforts to drive circadian rhythms in every aspect of physiology and behaviour, including the sleep/wake cycle. Attempts to understand how the eye detects the dawn/dusk cycle to align the molecular clockwork to the solar day led to the discovery of an entirely new class of light-sensing system within the retina. Efforts to explain why some people are morning types (larks) while others are evening types (owls) have been linked directly to subtle changes in specific clock genes.

I could go on and on, and I know colleagues in other areas of neuroscience could cite analogous triumphs. For some balance, I am keen to highlight psychiatry. It has long been known that conditions such as schizophrenia have a major genetic component, but identifying the specific genes involved has been a significant problem, and at one stage was thought to be an intractable one. However, very recent genome-wide association studies have provided real insight. More than 100 gene loci have now been linked with schizophrenia risk, identifying for the first time “genes for schizophrenia”. Furthermore, many of these genes have clear therapeutic potential, both as drug targets and in identifying environmental factors that influence the development of the condition. The point I am trying to make is that the “black box” that has squatted resolutely between genes and specific behaviours for such a long time is now being filled with real mechanistic insight.

I will not pretend that everything is perfect. We do face significant problems, not least how we fund and recognise the efforts of early career neuroscientists, who are often obliged to work in very large teams, making individual achievements hard to highlight. However, I absolutely refuse to support the notion that neuroscience now resides within a “trough of disappointment”. The immense progress and successes that have been and are being achieved across the broad spectrum of the discipline should be recognised and celebrated. The state of neuroscience is robust, and we are genuinely shuffling forward in our understanding of the most complicated structure in the known universe: the human brain.

Russell G. Foster is professor of circadian neuroscience at the University of Oxford.

POSTSCRIPT:

Print headline: Have our minds been blown yet?
