Five years ago, the “ground opened up” beneath Richard Mann. Then a junior postdoctoral researcher at Uppsala University in Sweden, he was in the middle of a two-month visit to the University of Sydney in Australia and was due to give a seminar about a research paper that he had published recently. The paper was by far the most significant of his fledgling career, and the culmination of 18 months of hard work.

Three days before the presentation, Mann – who is now a university academic fellow in the School of Mathematics at the University of Leeds – received an email from a former colleague with whom he had shared his data. It said that there appeared to be a problem with the analysis of his results.

A few frantic minutes of checking confirmed his friend’s suspicion: the analysis program had picked up only a fraction of the data that had been collected. “It felt like almost everything I had done in my entire postdoc had fallen apart,” Mann tells Times Higher Education.

Mann is not the first researcher to make a mistake, and he certainly will not be the last. Mistakes happen in science, as they do in all professions. But owning up to scientific mistakes can be particularly difficult given the job description (to describe the world accurately), the extent to which professional prestige is often bound up with a researcher’s sense of self-worth, the key role that papers play in building scientific reputations and the enormous difficulty, from the outside, of distinguishing cock-up from something more sinister.

Some mistakes that do not affect the conclusions of a journal article can be resolved with a correction: an addition that details the error, puts it right and discusses the implications for the research’s findings. But if the mistake undermines the conclusions of the research, the journal or authors are typically expected to retract the paper. The same process is used to expunge from the literature papers whose mistakes derive from research misconduct, so there is often a significant stigma attached to retracting a paper even if the mistake is an honest one.

When he gave his seminar, Mann marked the slides displaying his questionable results with the words “caution, possibly invalid”. But he was still not convinced that a full retraction of his paper, published in Plos Computational Biology, was necessary, and he spent the next few weeks debating whether he could simply correct his mistake with a new analysis rather than retract the paper.

But after about a month, he came to see that a full retraction was the better option as it was going to take him at least six months to wade through the mess that the faulty analysis had created. However, it had occurred to him that there was a third option: to keep quiet about his mistake and hope that no one noticed it.

After numerous sleepless nights grappling with the ethics of such silence, he eventually plumped for retraction. And looking back, it is easy to say that he made the right choice, he remarks. “But I would be amazed if people in that situation genuinely do not have thoughts about [keeping quiet]. I had first, second and third thoughts.” It was his longing to be able to sleep properly again that convinced him to stay on the ethical path, he adds.

An anonymous straw poll conducted for this article by Times Higher Education suggests that Mann’s hunch may be accurate. Scientists were asked what they would do if they found a mistake that seriously undermined the conclusions of a paper they had published in a high-impact journal. Of the 220 self-selecting respondents, 5 per cent said that they would do nothing and hope that no one noticed their error. A further 9 per cent would take no action unless their mistake was pointed out to them by someone else.

Peter Lawrence, Medical Research Council emeritus scientist in the department of zoology at the University of Cambridge, says that those figures probably underestimate the true proportion of scientists who would not confess to a mistake unless they were forced to.

“What you see [with this poll] is only a heads-up as to how corrupted the practice of science has become…The temptation to do nothing is high,” he says.

Behind the problem, Lawrence believes, is the pressure on researchers to secure the high-impact papers that lead to jobs and funding. Some researchers, he says, are content to submit papers that are misleading or littered with errors to top journals such as Nature, Science and Cell. “There is no reward for being honest about one’s results,” he says.

But there are at least some scientists who are still prepared to pursue the truth at all costs. Pamela Ronald, professor in plant pathology and in the Genome Center at the University of California, Davis, is one of them.

In 2012, two new postdoctoral researchers joined her laboratory. She asked them, as usual, to repeat some of the lab’s previous experiments, both to confirm the results and to help the newcomers get up to speed with the techniques her group uses. When the pair were unable to replicate findings published in Science in 2009 and Plos One in 2011, Ronald initially brushed this off as a result of inexperience or a failure to use the right number of controls in the experiments. But the postdocs soon convinced her that something was genuinely very wrong.

“It was terrifying. I was very distressed. What you want to do is crawl under the bed and never come out again,” she recalls.

But rather than doing that, she notified the journal editors that her work might be faulty, and set about investigating what had gone wrong. Throughout the ensuing 18 months of careful testing, to which she dedicated the equivalent in man hours of two full-time researchers, Ronald felt that her life was on hold. It transpired that one of the problems was what she describes as a classical microbiological error: her staff had been exchanging strains of a microbe without verifying them each time, so the strains had become mixed. In addition, an investigative technique that the group had relied on turned out not to be very robust. Hence, retraction was ultimately unavoidable.

She admits that she was unusually lucky to have had enough funding to pursue what she refers to as “the clean-up operation”. “This is why a lot of garbage ends up staying in the literature…Most labs don’t have funds to double-check their results,” she says. Nor, it seems, do those following up results always take the trouble to check them first. Indeed, Ronald’s faulty work led to a spate of copycat papers. One rival group heard about the work that was eventually published in the Plos One paper in an earlier conference presentation and scooped Ronald on it – published it ahead of her lab – presumably having first rushed through a genuine process of experimental confirmation.

Another group claimed to have replicated the work in the papers. This caused Ronald to wonder whether her lab had been right all along – until she double-checked the microbe strains used by the copycat lab and found that they too were faulty. That group eventually retracted its paper just before it was due to go to press, but the group that scooped her has yet to admit to any mistakes, and its paper stands uncorrected.

Coming clean: reporting errors

What would you do if you found a mistake in your work?

Exactly how many mistakes are in the scientific record is unknown. Published studies estimate that mistakes account for between 20 per cent and 30 per cent of all retractions in the biomedical and life sciences. However, only about 0.02 per cent of papers are ever retracted. The figure is rising, but it is unclear whether this reflects greater vigilance or a higher incidence of error and misconduct. Daniele Fanelli, a senior research scientist at Stanford University and an expert in research ethics, notes that even just 20 years ago most journals did not have retraction policies. A third of high-impact biomedical journals still lack them, he adds.

Research published in 2015 about the psychological literature suggests that one in eight papers has some inconsistency in reported statistics, but these are only the mistakes that are caught: many more may slip under the radar. According to Fanelli, the likelihood of error varies according to field, journal, type of data and even researchers’ country of origin. The last point, he says, is “an uncomfortable fact that is too often glossed over; emerging countries like China and India are producing lots of good research, but also seem to be at higher risk from errors and, possibly, misconduct”.

David Resnik, a bioethicist at the US National Institute of Environmental Health Sciences, says that the rise in retractions indicates that people are realising the importance of doing something about mistakes and misconduct. But he thinks that the increasing complexity of research also makes mistakes more likely. The huge datasets that many researchers work with and the different statistical techniques available to analyse them can throw up plentiful opportunities for error, he says.

This complexity also further blurs the line between mistake and misconduct, he adds. Researchers might choose to use a particular statistical technique for analysis because it shows a significance in the data that a more appropriate technique might not, for example. But it is equally possible that the inappropriate technique was selected simply because the researchers were unfamiliar with the alternatives.

Given the impossibility of knowing whether a researcher did something deliberately or accidentally, “people fear that their mistakes will be interpreted as misconduct”, Resnik says. This can stop them from coming forward when they realise they have made a mistake.

Red flags: reporting others' errors

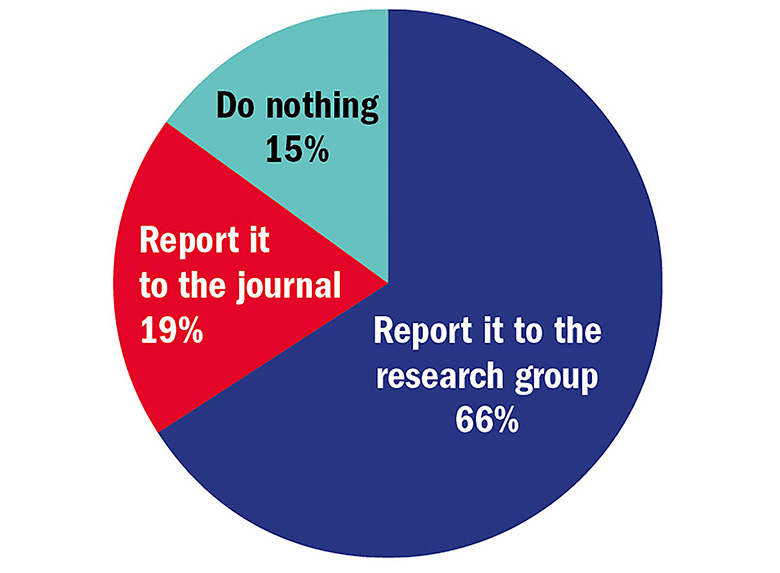

What would you do if you found a serious error in someone else's work?

Even when researchers do hold their hands up, amending the literature is not always straightforward if their co-authors are unwilling to cooperate, because many journals require all authors to agree before they will correct or retract a paper. Elizabeth Moylan, senior editor for research integrity at open access publisher BioMed Central, says that journal editors generally refer to the guidelines on retractions published in 2009 by the UK-based Committee on Publication Ethics (Cope), a membership organisation that offers ethical advice to editors and publishers. The guidelines state that an editor should try to speak to the authors to ascertain what happened. The editor might then approach the universities involved, too. Many institutions now have dedicated research integrity officers, who can help to get authors talking to each other or even investigate what has happened by scrutinising emails and other documents, Moylan explains.

“It is not really our job to investigate,” she says, adding that publishers lack the tools and the powers to do so. But in some instances, a journal may choose unilaterally to add an expression of concern to an article under investigation so that readers can make their own judgements.

Publishers are sometimes criticised for the length of time it can take for them to act on concerns, given the potential for faulty research to lead others up blind alleys, wasting their time and funding. But Chris Graf, co-vice-chair of Cope, says that it is overly simplistic to assign all the blame to journals when investigating a concern can be incredibly complex. “There are many players involved, and it doesn’t happen quickly,” he says. “We want proper process to be followed and for there to be due diligence.”

One researcher, who spoke to THE on condition of anonymity, learned how important this due diligence can be when a critic spotted errors in one of his papers. Because the errors did not significantly alter his conclusions, he promptly addressed them with a correction. But the critic went on to file a research misconduct claim against him, which his university was obliged to investigate. The academic had to endure what he describes as a terrible, anxiety-ridden six months as the investigation unfolded.

“Even though I knew that I didn’t do anything wrong, going through that process, I imagine, is similar to [facing] criminal charges,” he says. He attributes a significant drop in his productivity during that period to the stress of the investigation and the sheer amount of time it took to compile the required documentation and to answer questions.

The university found no evidence of misconduct, and the academic says that he is so far not aware of any repercussions for his scholarly reputation.

Some publishers are experimenting with new ways to curate the scientific literature to help alleviate the stigma of retractions. Medical science publisher the JAMA Network, for example, now offers authors the chance to retract and replace articles in one go. This is designed for authors who have published work with an honest but pervasive error that, when corrected, results in a major change in the direction or significance of the interpretation of the results and the paper’s conclusions.

Since its introduction in 2015, the retract and replace mechanism has been used four times across JAMA’s 12 journals. According to Annette Flanagin, executive managing editor and vice-president of editorial operations at JAMA and the JAMA Network, authors appreciate the opportunity to address mistakes this way and have been forthcoming in doing so.

However, says David Allison, associate dean for research and science in the School of Public Health at the University of Alabama at Birmingham, many journals are still dragging their feet when it comes to improving correction mechanisms. In 2014, he and his colleagues embarked on an impromptu exercise, which eventually stretched to 18 months, to correct the errors they came across while compiling their weekly newsletter about developments in their field of obesity, nutrition and energetics.

The team identified more than two dozen papers that needed correcting and, according to Allison, the scale of the problem they discovered was such that they could easily have spent the rest of their careers working full-time on it. What made it so time-consuming, he says, was the need to keep chasing many of the editors of the journals where the faulty papers appeared. Although some were helpful and proactive, others took more than a year to reply to him and some never responded to his concerns at all, he says.

He attributes some of their tardiness to the need to be fair to authors and to follow due process. But staffing also plays a part, he says – especially when the editor is also a full-time academic. And some editors without the support of big publishing houses with expertise in this area may simply lack good judgement when it comes to dealing with errors. Allison himself has served on the editorial boards of 20 journals but has never received any formal training.

“Another issue is fear of retribution. There has been a lot of suing lately,” Allison adds. For instance, in 2014, Guangwen Tang, who at the time worked for Tufts University, sought an injunction preventing The American Journal of Clinical Nutrition from retracting a paper on which she was senior author after an investigation by Tufts had found that the researchers had breached ethical regulations. Her argument was that the retraction would constitute defamation. However, the court ruled in the journal’s favour, and the paper was retracted in 2015.

Even for those researchers who do not call in the lawyers, errors can have financial costs. Mann had to stump up an additional article processing charge of $2,500 (£2,000) to republish his open-access paper with the new, bug-free analysis. He was able to pay this, he says, only because his boss was unusually well funded.

Overall, however, he counts himself extremely lucky to have come out of his error discovery nightmare relatively unscathed, with apparently undiminished employment prospects. He believes that this is because he got a fair research wind afterwards, and had a boss who liked him and had money to keep him on. “A large part of the reason that I am able to talk about [the experience] so frankly now is that it didn’t destroy my career,” he says.

By contrast, Ronald faced a lot of negativity when dealing with her mistakes. “It definitely hurts your reputation,” she says. Colleagues told her that she should never be promoted again, and her nominations for several prizes were withdrawn. But the biggest “kick in the stomach” was when a colleague of 25 years – someone she considered a friend – told her that it would be 10 years before anyone trusted her again.

“It is going to be really hard to shake that culture in science: that a mistake is still a sin,” she says.

Who would set the record straight? Times Higher Education Poll

What would you do if you realised that you had made a mistake in your work? Would you keep quiet in the hope that no one noticed so you could avoid the potential reputational fallout from a retracted paper? Or would you confess all straight away? Times Higher Education conducted an anonymous online survey to get a better idea of how many academics fall into each camp.

Of the 220 respondents, 86 per cent said that they would immediately report a serious error that affected the conclusions of work they had published in a high-impact journal. Just 5 per cent would do nothing, and 9 per cent would do nothing unless someone pointed out their error.

For research published in a less prestigious journal, slightly fewer respondents – 83 per cent – would report a serious mistake immediately. Six per cent would do nothing, and 11 per cent would keep quiet unless the error was flagged up by another researcher.

Survey respondents would be far less forthcoming about minor mistakes that did not significantly affect their findings. Just 41 per cent would report such an error immediately to the journal, and almost a quarter would turn a blind eye.

Those who took part in the poll seemed much keener to point out slip-ups by their peers. Two-thirds would report a serious error that they found in another group’s paper to the researchers that authored it, and a fifth would tell the journal immediately.

POSTSCRIPT:

Print headline: To err is human; to admit it, trying
