After a long winter of consultation, even the most battle-hardened of higher education policy wonks could be forgiven some weariness at the prospect of yet another review. But a fresh look at the research excellence framework by Lord Stern and colleagues is a welcome opportunity to settle an argument that has been festering for too long.

Assuming that we want to retain a dual-support funding system – and the government has given every indication that it does – how do we allocate the quality-related portion of that funding (now about £1.6 billion each year) in a way that is accountable, efficient and rewards high-quality, high-impact research?

Across the sector, the REF has become a condensation point for wider concern about the burden of audit, management and bureaucracy on academic life. An exaggerated debate has developed, in which the REF is frequently discussed in rather hysterical terms as “a bloated boondoggle”, a “Frankenstein monster” or “a Minotaur that must be appeased by bloody sacrifices”. It is supposedly responsible for a “blackmail culture”, a “fever” and a “toxic miasma” on campus.

It is no surprise that these messages have filtered up the system to ministers, and created a perception that the REF is a problem that needs fixing. So a cool, hard and evidence-informed look at the costs and benefits of the exercise – and a consideration of alternative approaches – is welcome. The Stern Review should also fill in the remaining pieces of the research policy jigsaw, after the higher education Green Paper and Nurse Review of the research councils left several questions unresolved.

As Jonathan Grant pointed out in Times Higher Education earlier this month, even at an estimated cost of £246 million in 2014, the REF is actually a considerably more efficient means of distributing research funding than the research council grants system. That gap is only widening: the declining value of the science budget has pushed success rates in some research council funding schemes below 10 per cent, so more than nine in 10 applicants are investing large amounts of time and effort in unsuccessful proposals.

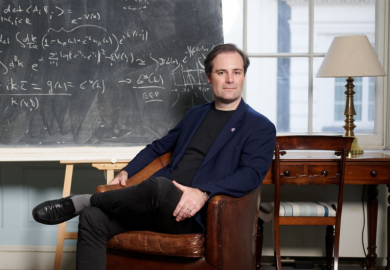

The thorough series of evaluations of REF 2014 carried out last year will provide a solid evidence base on which to build. In addition, my independent review of metrics, published as The Metric Tide in July 2015, took an in-depth look at the role that quantitative data could play.

REF sceptics often look to metrics as the answer. This is reflected in the Stern Review’s terms of reference, which call for “a simpler, lighter-touch method of research assessment, that more effectively uses data and metrics while retaining the benefits of peer review”.

But as we’re seeing in debates over metrics for teaching assessment, the seductive promise of big data doesn’t always hold up under scrutiny. My review group approached this issue with an entirely open mind, but after spending 15 months analysing available metrics, performing correlation analyses and consulting widely, we were forced to conclude that “no set of numbers, however broad, is likely to be able to capture the multifaceted and nuanced judgements on the quality of research outputs that the REF process currently provides”.

If all the REF is intended to do is provide an allocation mechanism for quality-related funding, then it's perfectly possible to devise an algorithmic solution. But the exercise, as it has evolved through successive cycles, does a lot more than this. And compared with the existing process, coverage and robustness across disciplines remain the biggest challenges for metrics. Whatever Elsevier and others with metric services to sell may say, there are serious gaps, especially across the arts, humanities and social sciences.

For instance, my review highlighted that there are a handful of arts-based higher education institutions for which journal articles were a minor component of their 2014 REF submission. And while the percentage of non-journal outputs may be much smaller for larger research-intensives (24 per cent at the University of Oxford; 15 per cent at University College London) their sheer size means a great deal of excellent research would not be counted if we switched to a purely citation-based approach.

Regarding the impact element of the REF, the problem is even greater. As both my review and a separate evaluation by King's College London and Digital Science show, we simply don't have reliable quantitative indicators that can capture the richness and diversity of impacts depicted in the nearly 7,000 REF case studies.

So while the Stern Review’s aspiration to reduce burden and incorporate quantitative data more intensively in research evaluation is entirely correct, metrics provide few easy wins. And, as Grant also pointed out, the financial savings from a switch to metrics are likely to be modest at best.

Instead of diving headlong into the metric tide, I continue to think that the focus for the next REF cycle should be on improving the robustness, coverage and interoperability of the datasets that we have, and applying them responsibly in the management of the research system. This need for a framework of “responsible metrics” for research was the headline message of The Metric Tide, and I hope Lord Stern and colleagues will consider it seriously.

James Wilsdon is professor of research policy and director of impact and engagement in the Faculty of Social Sciences at the University of Sheffield. An updated book edition of The Metric Tide, the report of his independent metrics review, is being published by Sage Publishing this week.

POSTSCRIPT:

Print headline: Nip and tuck
