AI is already inside the black box of peer review, often without fanfare. Surveys suggest that a majority of reviewers now use AI tools in some form, frequently in tension with existing guidance. Review-bots promise to read, summarise and critique papers. And the invitation seems irresistible.

Peer review is chronically overstretched. Submissions have surged, but the pool of qualified reviewers has not. Those who remain are inundated with review requests and with reminders about reviews they have already promised. For editors, too, AI appears to fix the scarcity problem, offering faster triage, prompter reports, and cheaper and more consistent verdicts.

But to treat peer review as a throughput problem is to misunderstand what is at stake. Review is not simply a production stage in the research pipeline; it is one of the few remaining spaces where the scientific community talks to itself.

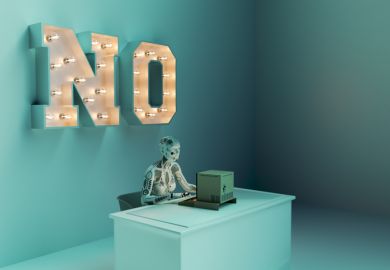

Once we invite machines to act as reviewers, we begin to slide from seeing peer review as a human conversation to treating it as a technical service. But a peer is not merely an error‑detector. A peer is someone who inhabits the same argumentative world as the author, who recognises the paper as situated in a field’s live disputes, fashions and vulnerabilities. The reviewer’s exasperating “I see what you’re trying to do, but…” is not a bug in the system; it is the system. It signals that another human intelligence has wrestled with the work long enough to care.

AI tools, by contrast, do not wrestle; they process. They can mimic the surface features of a referee's report, but this is "understanding" without understanding. Systems built on large language models stitch tokens together into statistically plausible, coherent text, and coherence does not imply comprehension. Much of what they produce is, in effect, a regurgitation of the material they were trained on.

Unlike human reviewers, who care about knowledge, LLMs have no stake in whether a finding is true, a method convincing or a theory illuminating. They have not helped build the edifice of knowledge. They belong to no epistemic community. They cannot be embarrassed to be wrong.

This matters because science is not only a body of results but a culture of argument. We like to describe the state of science in terms of replication crises, effect sizes and citation curves, but underneath sits something older and more fragile: the expectation that claims will be exposed to the judgement of one's peers, who in turn are willing to be persuaded, or not. Yes, that dialectical back‑and‑forth is messy, slow and emotionally taxing. Ego or personal gain can bias judgement and corrupt behaviour. But it does something no automated system can: it tests claims against the judgement of people who have a stake in the answer.

The current enthusiasm for automated review is of a piece with the wider industrialisation, and commercialisation, of academic life. We already manage research through dashboards, benchmarks and performance indicators. AI is just another tool for squeezing friction out of workflows, another promise of "efficiency gains" in grant allocation or editorial decisions.


But friction is not always waste. The reviewer who is late because they are genuinely unsure, or who resists a fashionable claim until the authors have done more work, embodies a kind of care that metrics struggle to capture.

Proponents will respond that AI is not meant to replace humans but to support them, and that judicious use can actually free reviewers to focus on higher‑order judgement. There is some truth in this. Yet the boundary between assistance and authorship is thin. Once an editor starts relying on an AI‑generated score, or a reviewer quietly pastes an auto‑drafted report with minimal edits, it becomes difficult to say where human responsibility ends and machine suggestion begins.

That ambiguity leads directly to a political problem: accountability. Human reviewers, for all their biases and blind spots, can be questioned and persuaded. What does it mean to persuade a bot? Would an author be as invested in doing so? And if they weren’t, would peer review’s ability to draw out improvements to manuscripts start to wither?

Further, if a bot’s output in effect shapes the fate of a manuscript, who is answerable for that decision: the editor, the publisher, the vendor, the training‑data ghosts of past reviewers? And if reviewers lean heavily on AI, why should researchers not use AI to write manuscripts – taking humans out of the publication loop entirely?

When nobody quite owns the judgement, it becomes dangerously easy for everyone to disclaim responsibility. Yet science rests on the personal responsibility that comes with authorship.

None of this is to romanticise the status quo. Peer review is imperfect, sometimes exclusionary, occasionally petty. We have all seen nonsensical reports and capricious delays. But the answer to those failings is to invest more in the human practice – through training, recognition and realistic expectation – not to hollow it out.

Policymakers, funders and publishers should resist the urge to treat AI as an inevitability that must simply be “managed”. It is a choice, and like any infrastructural choice it reveals what we value.

If we prize speed and scale above all else, then of course we will automate judgement wherever we can and reconcile ourselves to a thinner notion of what counts as understanding. If, instead, we still believe that science is a vocation grounded in argument, curiosity and care, then we should be honest about the limits of delegation.

Let AI help with the paperwork if it must. But the act of saying “this work belongs in our shared conversation” – or not – is something only a peer can do.

Akhil Bhardwaj is an associate professor at the University of Bath School of Management.
