How to strengthen your metacognitive skills to collaborate effectively with AI

Ever found yourself repeatedly rephrasing a question to a generative AI model, such as ChatGPT, even though the answers remain unsatisfactory? Perhaps you're subconsciously using the same mental shortcuts you rely on in human interactions. AI complements our thinking but isn't the same as human intelligence; we can't simply rely on it and switch on our cognitive autopilot. In a prologue to this article, we explained why metacognition is especially important when collaborating with AI. Read on to explore how to strengthen your metacognitive skills and avoid falling into such cognitive traps.

Metacognition is the ability to step outside our current cognitive focus and think about our thinking. It draws on knowledge (about our own skills and the task we are working on) and on mental regulation (monitoring what we are doing and whether our strategies are working, then using this information to adjust what we do going forward).

Metacognition in practice

In ordinary terms, metacognition is simply thinking about our thinking. When it is used to improve learning and performance, however, it involves four steps. In the context of collaborating with AI, these are:

- Awareness: Recognising when your strengths, weaknesses and cognitive biases are influencing how you communicate with the AI and how you respond to its output.

- Planning: Using this self-awareness to divide tasks between yourself and the AI in the way that best meets your objectives.

- Monitoring: While working, tracking both your own progress and the AI's progress towards those objectives.

- Evaluation: After the task, reviewing what went well and what did not, in order to refine your approach to future collaboration with AI.

Improving awareness

Most people engage in metacognitive processes to some degree, but collaborating with AI requires a sharper awareness of our own strengths and vulnerabilities. First, we should think about where the AI can improve performance on the task, while also identifying where our own human intelligence is essential to achieving the best result.

It also helps to know something about the heuristics or mental shortcuts that our brains use and how these influence our decisions. When applied inappropriately, these heuristics turn into biases because they oversimplify complex situations, leading to errors in decision-making. Examples of such biases include:

Anchoring bias: Giving more weight to the first piece of information we receive. Users might allow the AI's first response to limit the range of information they consider, and so overlook a better solution.

Confirmation bias: Seeking information that confirms our beliefs without looking for alternative information. AI tools draw from a massive pool of data. If we allow our bias to direct the AI (“find me evidence that xxxx”), it will probably find information to confirm our beliefs – even if there is far more information to support an alternative belief.

Halo effect: Using one positive attribute or experience to infer that another will also be positive. Remember that, unlike humans, AI systems can perform extremely well on tasks they have been trained for but very poorly on others, so strong performance on one task is no guarantee of strong performance on the next.

Sunk-cost fallacy: Persisting with an existing strategy because of the time, effort or money already invested. Because the AI is trained on specific data, it might not be able to provide the exact solution you are pursuing (for example, a particular way of structuring your code). Yet instead of trying a different tool, you think: "I've put in so much time, I should keep going."

Other biases can also come into play, such as distrusting automated decisions ("algorithm aversion") or believing we know more than we do ("overconfidence bias"). These can cause us to miss out on valuable AI insights. The good news is that there are strategies we can use to minimise the impact of these biases.

Planning, monitoring and evaluation

One of the most effective ways to foster metacognitive thinking is to use self-prompts: questions we put to ourselves, rather than to the AI. While working with AI, we should be prompting ourselves to plan, monitor and evaluate, asking questions such as:

Planning: What is it that I am trying to achieve on this task? What types of errors do I need to look out for when using this AI tool on this type of task?

Monitoring: Am I making progress towards my objectives? What biases might be influencing my judgement?

Evaluation: Could I have made better use of the AI on the task? What should I do differently next time?

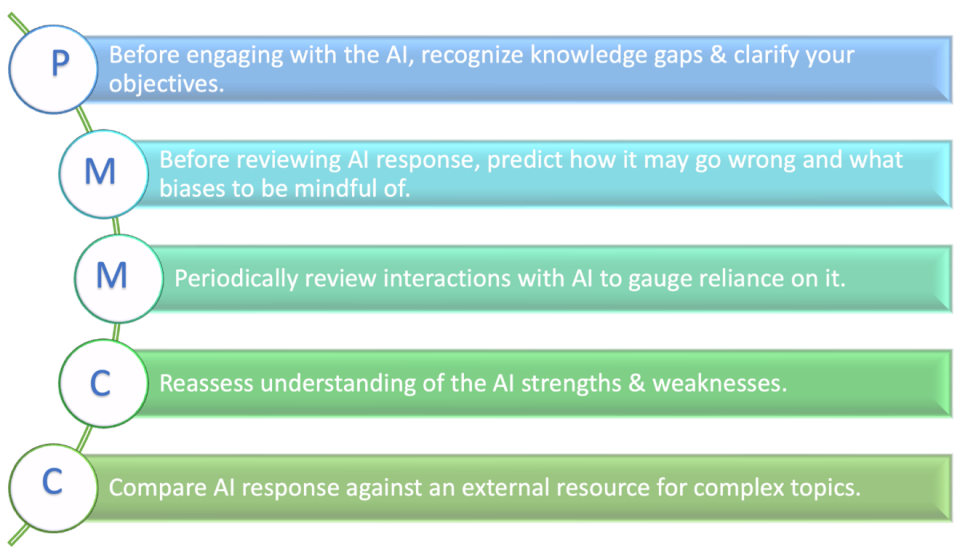

Computer applications can be designed to display these prompts during a task, including automatic reminders to monitor progress. But keeping track of progress isn't enough; it is equally important to know how accurate your own sense of your strengths and weaknesses is. Sometimes we think we know more than we do, and sometimes less, which makes it hard to judge how we are really doing. Studies show that tailored planning (P), monitoring (M) and control (C) strategies (see figure below) improve learning and performance on complex tasks. Feedback that compares how confident you felt after completing a task with how well you actually performed also helps you track progress accurately.

Figure: Metacognitive strategy instructions for AI collaboration

Mindfulness

While feedback can help us to monitor progress, understanding how our brain works can also help us control our thinking. Broadly, our thinking operates in two modes: in one, we are cruising on autopilot; in the other, we are focused and aware. When teaming up with AI, it is tempting to stay in cruise mode and lean on the AI. But the key to effective collaboration is that human and AI complement one another, which requires awareness of each other's strengths and weaknesses. One technique that can train our brain to switch from autopilot to a state of awareness is mindfulness. Mindfulness helps us move from simply reacting to noticing our thoughts, thereby promoting metacognition. At its core, mindfulness involves focusing on a single object or thought and, whenever your mind wanders, gently bringing it back.

To conclude, here are a few simple habits to adopt when you begin working with generative AI.

Start by making a simple plan of what you and the AI are each good at; it doesn't need to be intricate. While doing the task, pause often to notice which cognitive biases may be shaping your judgement and whether there might be an alternative approach. Finally, try practising mindfulness daily. What matters is consistency, even if you practise for only a few minutes each day. The AI revolution is here, but we need to embrace it mindfully.

Claire Mason is a principal research scientist and leads the technology and work team at the Commonwealth Scientific and Industrial Research Organisation (CSIRO).

Sidra is a postdoctoral fellow at the CSIRO, specialising in collaborative intelligence. Her work centres on optimising collaboration between artificial and human intelligence with a focus on unlocking the full potential of the human mind.
