How can generative AI intersect with Bloom’s taxonomy?

Two years ago, we were invited to write a book chapter about integrating artificial intelligence (AI) in management learning for the book Future of Management Education. At the time, our understanding of AI was limited to its potential as a support tool for teaching and learning (T&L), to provide personalised learning for students and to reduce onerous tasks for educators. Like Harin Sellahewa and Michael Webb, we believed AI would be something that would be willingly brought into higher education (HE) to enable inclusive learning experiences.

Little did we know that generative AI would soon gatecrash our world, disrupting our T&L space and dividing the academic community into groups comparable to Everett M. Rogers’ technology adoption cycle published in Diffusion of Innovations. Most recently, the Chronicle of Higher Education published an insightful article capturing the views of many academics, titled Caught Off Guard by AI, which highlights the confusion and helplessness among academics and scepticism over the purpose and future of higher education. Despite the diversity of views across the sector, academics are desperate to receive clear and practical advice and guidance from their own institutions on how to manage this complex situation. But higher education institutions (HEIs) are themselves unsure and are looking for external support.

Before we share our approach to using AI in T&L, we would like to make it clear that this article did not use generative AI, and we take an objective perspective towards the role of AI in HE. We are neither innovators nor laggards. We are simply curious academics with a passion for advancing T&L and our profession.

The way AI has been portrayed in the HE landscape over the past few months emphasises the perceived usefulness and ease of use of generative AI, such as ChatGPT, predominantly in the context of assessment creation. Most debates are anchored around the issues of academic integrity and therefore reflect fears about quality assurance. However, AI in the context of assessment and academic integrity is just one small piece in a big puzzle. From a T&L perspective, the purpose of HE is traditionally viewed as supporting students’ development of higher-order thinking skills through knowledge acquisition, knowledge-sharing and, most importantly, knowledge creation.

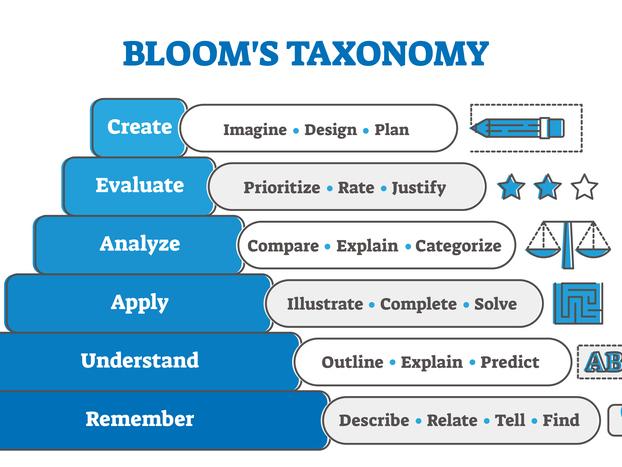

Underpinning these practices are cognitive processes: lower-order to higher-order thinking skills, starting with remembering and building through understanding, applying, analysing and evaluating, before reaching the pinnacle of educational learning – creating. These are well summarised by Bloom’s taxonomy (and revised versions such as Krathwohl) and are often depicted as a pyramid. This concept of lower- to higher-order thinking skills heavily influences and governs the way we design, teach and assess, and is implicitly embedded in qualification frameworks, university-level grade descriptors and learning outcomes.

The conundrum is that we have become so reliant on Bloom’s taxonomy that it is almost impossible to think differently about how to design assessments and T&L. We have also come to believe that the creation of knowledge is not possible without going through these cognitive processes. In contrast, AI can create content of many types almost instantly. Plus, generative AI draws from a vast amount of information without feeling overwhelmed or stifled by capacity limitations. As in a video game, we can just jump five levels. No need to remember, understand, apply, analyse or evaluate information.

Thus, AI could be a welcome friend to humans in overcoming information paralysis and cognitive overload. Yet sometimes the information might not be correct, and it often appears formulaic and lacks detail. This makes it tempting (and easy) to harness this argument to simply disregard the use of generative AI in HE. But knowing that it essentially reduces the need for cognitive thinking processes to arrive at a believable output raises the question: how can we use this insight to evolve from our current understanding of learning and assessment design to one that incorporates new technologies? The disruption has offered an opportunity for change, so perhaps this beast is a blessing in disguise.

Think about Bloom’s taxonomy: wide at the base (remember) and reaching a small pinnacle at the top (create). The base represents the lower-order thinking skills of remember, understand and apply, whereas analyse, evaluate and create represent higher-order thinking skills, with create being the sometimes-elusive goal in HE. However, when utilising generative AI, the pyramid flips. Creation of knowledge is now available to the masses with the correct input of a simple prompt. Meanwhile, the small pinnacle now demonstrates the skill of remembering – the recall and sharing of knowledge, acquired only by those who invest time and commitment to understand information created by generative AI through evaluation, analysis and application. In essence, when considering the impact of generative AI, we suggest that the common understanding of higher-order and lower-order thinking skills has literally and metaphorically been turned upside down.

Bloom’s taxonomy remains a respected method in teaching, learning and assessment design. We simply suggest that when integrating generative AI into teaching, learning and assessment, we should flip our approach and start with creation. Suddenly, AI becomes a partner for learning, a co-creator that might accelerate insight. For example, we could encourage students to use ChatGPT to create a business model for a new entrepreneurial venture. The business model is then evaluated and analysed using a range of tools explored throughout the module. Students apply the outcomes of the evaluation and analysis and consider, for example, the application of strategies relating to sustainable business. Finally, in-person assessments such as presentations, oral exams or reflective debates can be used to ascertain students’ understanding and remembering.

Remember: AI is just a tool, created by humans. What we put in is what we get out. As creators and disseminators of knowledge rooted in robust and ethical research, it is our responsibility to utilise digital tools in a way that makes the application of generative AI a benefit to our profession and society in the long run.

Christine Rivers is a professor at Surrey Business School, University of Surrey. Her research focuses on mindfulness in business education with particular emphasis on developing future leaders and responsible use of artificial intelligence to address organisational and societal challenges.

Anna Holland is associate professor and director of learning and teaching at Surrey Business School, University of Surrey. Her research focuses on generative AI in learning, teaching and student experience.
