The trouble with Bloom’s taxonomy in an age of AI

When using large language models to create learning tasks, educators should be careful with their prompts if the LLM relies on Bloom’s taxonomy as a supporting dataset. Luke Zaphir and Dale Hansen break down the issues.



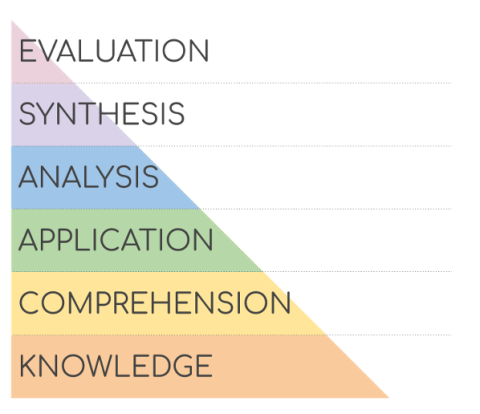

Educators have always faced the challenge of determining whether their students are thinking. Since the 1950s, Benjamin Bloom’s taxonomy of educational objectives has been one of the most influential pieces of educational psychology (see Figure 1). However, now that teachers may be using AI tools to assist them in task creation, we need to reconsider how we design teaching for thinking.

Large language models (LLMs) and the AI tools built on them, such as ChatGPT and Gemini, treat Bloom’s taxonomy as one of the most significant influences on how critical thinking is defined. In this article, we explore the challenge of integrating AI into teaching, learning and assessment when Bloom’s taxonomy is a significant part of these tools’ training data.

What is Bloom’s taxonomy?

Bloom’s taxonomy, a six-category framework of educational goals, has been highly influential for educators for nearly 70 years because it creates a clear structure for learning, is highly versatile across contexts, and facilitates the creation of critical-thinking activities.

It’s versatile but is Bloom’s accurate?

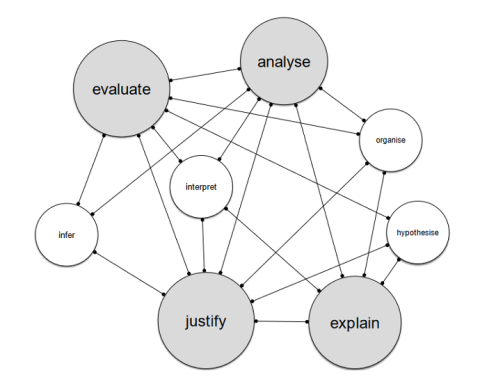

Criticisms of Bloom’s taxonomy have also been around for more than half a century, and many are well known. One of the major issues is that the taxonomy does not capture the complexity of cognitive skills. It can be seen as inaccurate because cognitive processes interrelate with one another rather than building on one another.

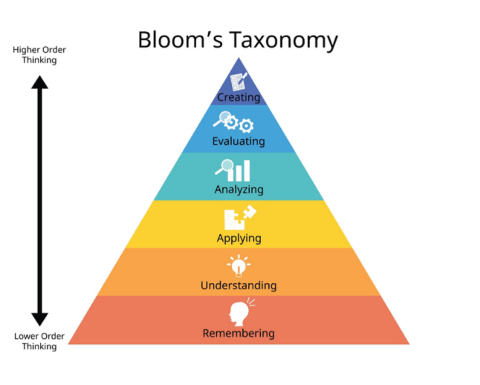

To illustrate, let’s look at an “understanding” task (see Figure 2): “Define life.”

This is a deceptively complex task. Not only would it require a standard understanding of respiration, response to stimuli and reproduction, but it would also merit discussion of more spiritual or existential issues. Ecosystems and stars may fit a definition of life. Any definition will be incomplete and debatable. A student would demonstrate high levels of critical thinking and insight by discussing the foundations of our understanding rather than merely explaining our definitions.

The taxonomy has seen revisions over time (notably by David R. Krathwohl in 2002), but these still don’t fully capture the interrelated and interdependent nature of thinking processes. Alternatives such as cognitive web models (as proposed by Peter Ellerton and co-authors from the University of Queensland Critical Thinking Project in 2022) emphasise that cognitive skills often require a host of related skills and tasks (see Figure 3), an approach that may be more useful in designing for learning objectives.

Prompting AI for assistance with teaching thinking

To illustrate, we prompted ChatGPT to create questions that demand increasing amounts of critical thinking on the following task: “Describe the structure of a cell.” It responded with:

- Basic understanding: Describe the basic structure of a cell, including its main components.

- Comparing cell types: Compare and contrast the structures of prokaryotic and eukaryotic cells, highlighting their key differences and similarities.

- Specialised cell structures: Explain how the structure of a plant cell differs from that of an animal cell, emphasising the unique organelles found in each type.

- Cellular functions: How do the various organelles within a eukaryotic cell contribute to its overall function and survival?

- Cellular adaptations: Analyse how the structure of cells in different organisms reflects their specialised functions and adaptations to their environments. Provide examples.

- Theoretical cell modifications: If you were to design a hypothetical cell with a specific function, how would you modify its structure to optimise its performance? Explain your reasoning.

When asked how it had increased the critical thinking, ChatGPT responded that it had incorporated elements of Bloom’s taxonomy into the design to promote higher-order thinking, then demonstrated how each question aligned with the taxonomy. Generative AI still “believes” that cognitive skills are hierarchical.

This is a problem for educators in two significant ways.

First, the questions are wildly inconsistent in their level of difficulty. The sixth question (creating) is so challenging that likely only a biology expert could answer it. This might be addressed through better prompting practices, but that leads to the second problem.

Second, when asked for greater levels of critical thinking, AI struggles to discern among levels. “Comparing and contrasting” is an “analysis” skill, yet ChatGPT has treated it as an “understanding” skill. A good answer to that question would likely draw on both, further demonstrating that these skills are interrelated rather than hierarchical.

Create AI prompts that widen perspective or engage with ambiguity

The obvious conclusion is that educators need to be careful with their prompting when it comes to using AI as a design tool. More than that, we need to recognise that the AI may create hierarchies (which are potentially inaccurate to boot) whenever prompted for suggestions or during brainstorming.

Educators will need to consider the specific cognitive skill they want to teach or assess (when they say analysing, do they really mean classifying?), along with the qualities of that thinking (for example, do they care about coherent classifications or broad classifications?).

Consider prompting AI for activities that require broader perspectives or engage with the ambiguities of the issue. “Create an activity that explores the many ways life can be defined” is going to produce greater variety and complexity than asking it to “add critical thinking or difficulty to this question”. Given that contemporary critical-thinking pedagogy recognises the interrelatedness of thinking skills as well as the different qualities of thinking, it’s essential that we exercise care when designing critical-thinking tasks.

Luke Zaphir and Dale Hansen are learning designers in the Institute of Teaching and Learning Innovation at the University of Queensland.


Additional Links

For more on qualities of thinking, see “The Values of Inquiry: explanations and supporting questions” by Peter Ellerton of the UQ Critical Thinking Project.
