In an era of online anti-vaccination theories, climate change scepticism, and hostility from some quarters towards universities, academics are nervously eyeing public trust in science like never before.

But do researchers actually trust each other? The answer, according to data from a survey of more than 3,000 scholars across the world, is “sometimes”: a sizeable minority placed limited weight on their colleagues’ work.

“There’s always someone trying to pull the wool over your eyes,” according to one anonymous response to the survey. “Within your own field this can be easier to detect but it’s less easy to determine when scouting subjects that you are less familiar with,” they warned.

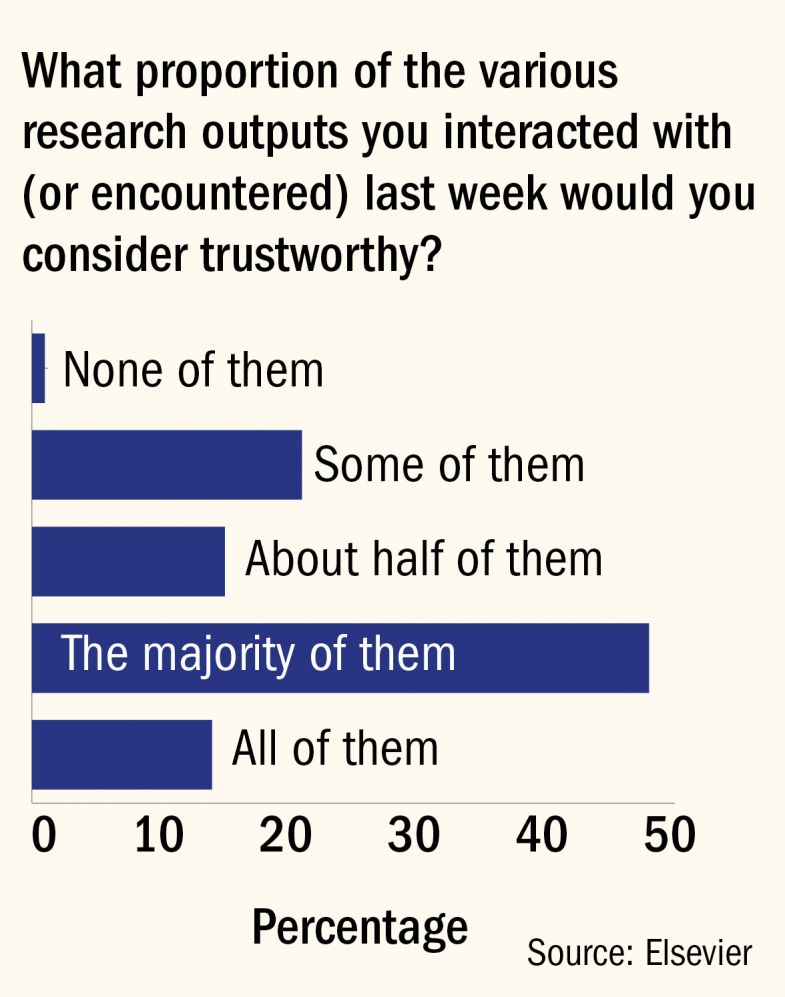

A full 37 per cent of respondents said that during the past week, “about half” or less than half of the “research outputs” they “interacted with” or “encountered” were actually “trustworthy”. Only 14 per cent said that they trusted all the work that they had come across.

Level of trust in each other’s work

With scholars “overwhelmed” by the volume of research that they need to keep abreast of, it is “not a great surprise that researchers are doubting some of the material that’s out there”, said Adrian Mulligan, director of customer insights at the publisher Elsevier, which carried out the survey.

The results come with some caveats: “research outputs” can refer not just to articles in journals, but also to things like academic blogs, datasets, non-peer-reviewed papers in preprint repositories, or, in some cases, news articles about findings, Mr Mulligan stressed.

Academics inclined to distrust may have been more likely to have taken the survey. And Elsevier has an interest in presenting academics as being flooded by potentially dubious work, as the publisher markets itself as being able to help scholars navigate this stream of information.

But the findings may still help illuminate an arguably poorly evidenced part of the scientific process. While statistics on public trust in science are plentiful, Mr Mulligan said that he was not aware of any other research on whether scholars trust each other.

“I was surprised by these results,” said Catherine Heymans, an astrophysicist based at the University of Edinburgh and the Ruhr University Bochum, Germany. “That’s not what you find in my field,” where there was a higher level of trust, she argued.

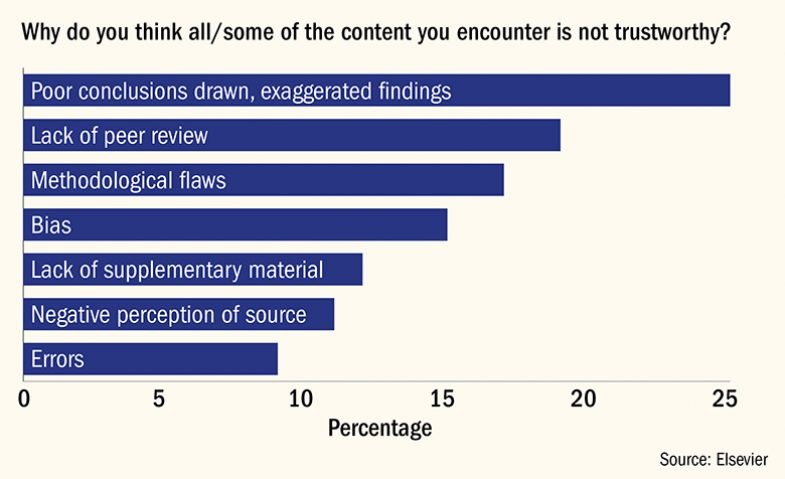

Reasons for doubting the reliability of research

The survey, which drew on a random selection of authors in Elsevier’s Scopus database, asked academics what raised red flags over research trustworthiness.

“Poor conclusions drawn” or “exaggerated findings” were two of the things likely to evoke suspicion. The biggest warning sign is when authors “oversell” their results, Faith Osier, a malaria researcher at Heidelberg University Hospital and a speaker on public trust in science, told Times Higher Education. “As authors, we are all guilty of this to varying degrees at some point in our research careers,” she said.

“Lack of information or detail provided” was also a key concern for survey respondents. Checking supplementary material or data was the most common way in which academics said they compensated for a lack of trustworthiness.

This was less of a problem in physics, and particularly in astrophysics, because the majority of data were public – mandated by policies from major facilities, explained Professor Heymans. It was increasingly common for astrophysicists to publish their analysis software too, meaning that results are “becoming 100 per cent reproducible”, she said.

The Elsevier results indicate that peer review – or a lack of it – is the second most important factor in determining trustworthiness.

Peer review is “absolutely crucial”, said Professor Heymans, because, particularly in astrophysics, it usually involves checking the reproducibility of findings. But given the choice between peer review and fully open-access data and software, she would choose the latter. “I don’t need the peer review if that’s there,” she said.

Ioana Cristea, an associate professor at Babeş-Bolyai University in Romania who focuses on research transparency and rigour, said that, all things being equal, she would trust a peer-reviewed article over one that was not. But, “you always have this question mark: how did it [a paper] land here [in a particular journal]?” she said.

Some peer reviewers offered only very general comments on papers, she said, while she suspected some journals in her field of seeking out “controversial” and “citable” papers. Journal titles were sometimes used as a “proxy” for trustworthiness, even if using such “shortcuts” was not ideal. “Everyone cannot read the entire literature,” she said.

“Any scientific paper can be wrong – no matter where it is published, no matter who wrote it or how technically sound the method is, or how well supported the arguments. Even peer review at times doesn’t solve the problem,” said Abrizah Abdullah, a professor at the University of Malaya who has investigated scientific trust in Malaysia and China. “An easy way to detect that a paper has a problem is when you have the feeling that the authors didn’t actually read some of the papers they are citing,” she said.

About half of surveyed academics said that they would “seek corroboration from other trusted sources” when working out whether to trust a paper; Professor Cristea said that she had cultivated a trusted list of academics on Twitter who flagged up good and bad research.

“I follow some people...and trust them, and if they say a paper is crap I believe them,” said Brigitte Nerlich, emeritus professor of science, language, and society at the University of Nottingham.

“Given my multidisciplinary work, I tend to only read papers that have been sort of pre-checked by others – via blogs or Twitter,” she added. “That means I use experts in various fields – that I trust, and of course that’s subjective – as gatekeepers. I especially trust those who speak out against hype.” An “incomprehensible” abstract, or a lack of information about method or findings, were also red flags, she said.

“I don’t share the common view that many papers contain mistakes,” said Merlin Crossley, a professor of molecular biology at UNSW Sydney who has written about the peer review process. “I trust nearly all the papers I read – certainly greater than 90 per cent, but while I trust the data I may have reservations about the scope of the conclusions.

“We’re trained to be sceptical of everything,” he said. And yet, he added, researchers are “also trained to present our work in a clear way and to highlight the positive so I’m always cautious about whether the interpretations and conclusions are exaggerated”.

About one in 10 survey respondents also flagged “errors”, whether in grammar, citations, “inflated statistical power”, code or calculations, as a reason for doubt. One respondent said that they had discovered “poor statistical elements that are obvious to an educated reader but clearly were not obvious to reviewers”.

david.matthews@timeshighereducation.com

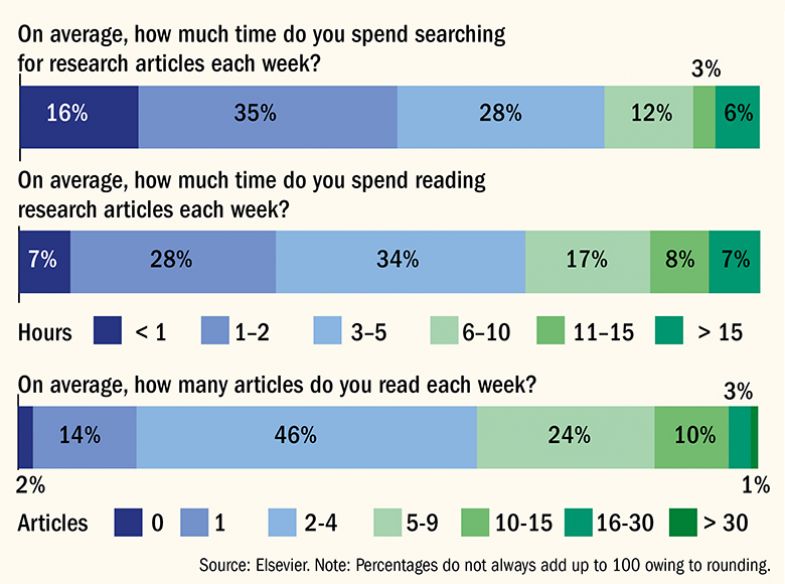

Academics spending more time searching for articles

Academics’ concerns about the trustworthiness of research may be forcing them to spend more time searching for articles to read.

A survey of 1,450 researchers conducted by Elsevier – and released alongside its work on trust – found that respondents spent nearly as much time looking for scholarship as reading it.

Respondents, who were randomly selected from the publisher’s Scopus database, spent an average of four hours and eight minutes each week searching for research articles. They spent five hours and 18 minutes reading them, on average.

Time spent searching for and reading articles

The mean number of articles read each week by respondents was 5.4.

Elsevier said that tracking the results over time suggested a worrying trend: comparing 2019 with 2011, researchers were reading 10 per cent fewer articles, but were spending 11 per cent more time looking for them.

Respondents said that an average of 51 per cent of articles that they read were useful, compared with 56 per cent in 2013.

“The issue of quality and reliability is so fundamental for researchers and can have such a knock-on effect on their ability to further their research,” said Tracey Brown, chief executive of Sense about Science, which partnered with Elsevier on the project. “With the speed in growth in data volumes and sources, this debate has never been more important.”

Chris Havergal
