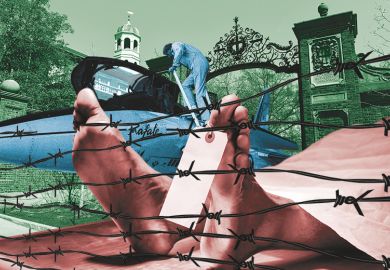

Academia is a sector under siege as its expertise is called into doubt regularly by Donald Trump and political leaders around the world. While it’s true that alternative facts may be cooked up on social media, in the tabloid press, in mercenary contracts to manipulate elections, they’re also brewed in the lab, where a publish-or-perish dictum drives careers. Fraudulent research does nothing to help academia’s standing with the public, but more critically, it threatens science.

Speak to academic researchers and they’re disinclined to over-egg their findings. But they operate in a world where they’d be crazy not to. Negative results, for example, don’t interest journal editors. But, at the whiff of a chance that some novel idea could actually be true, suddenly they’re all ears.

Science would actually be served if null results were published. Scores of fellow researchers could avoid dead ends and invest their time and resources more profitably. But that’s not how it works in academic publishing, where sensation rules. The language might be different to the tabloids, but the sensibility is the same.

The system encourages tweaking of research results to get them over the line, to nudge them into the realm of statistical significance. And it arms researchers with a perfectly tailored weapon: the p-value, that century-old test of statistical significance that – to a journalist’s eyes – looks anything but. Whoever coined the phrase “lies, damned lies and statistics” could have had p-values in mind.

What does this mean in practice? It means that academics routinely publish findings that cannot be replicated. The so-called replication crisis has plagued psychology and biomedicine. It is partly responsible for the billions of dollars squandered on fruitless drug trials – a cost that we all end up wearing every time we visit the chemist.

Now an Australian-led study has found that this statistical gamesmanship is not limited to psychology and biomedicine. It’s alive and well in evolution and ecology.

That’s no surprise, really. Pressure to publish is not discipline-specific. It permeates research.

Does it matter if researchers fudge their findings about how the newt evolved, or whether prairie voles mate for life? If they get it wrong, it’s not going to ramp up the price of antibiotics, after all.

The University of Melbourne ecologist Hannah Fraser, who led the Australian study of questionable research practices in evolution and ecology, says that it matters because it gives policymakers a “neat scientific excuse” not to do anything about, say, excessive land clearing. Or climate change.

While some researchers build careers on debased science, others build careers combating it, drifting away from the disciplines that they’ve devoted years to because they figure that they can make a bigger contribution by putting derailed science back on the right track.

One of them is John Ioannidis, a Harvard-trained medical doctor who moved sideways into statistics and is now a professor of both disciplines at Stanford University. He famously argued that most published research findings are more likely to be false than true.

Research integrity campaigners say that the statistical tweaking of research results is widespread, and a much bigger problem than the brazen scientific fraud that captures the headlines, such as Andrew Wakefield’s discredited research linking vaccination with autism.

Or thalidomide whistleblower William McBride’s false findings that the morning sickness drug Debendox caused birth deformities. Or Japanese biologist Haruko Obokata’s purported discovery of a deceptively simple way of manufacturing stem cells. Or Swedish marine biologist Oona Lönnstedt’s claims that microplastics were driving European perch towards extinction.

But the reality is, we don’t know how many researchers graduate from statistical fine-tuning to full-blown fabrication. “It’s really hard to get data on fraud,” Fraser acknowledges. “People aren’t going to answer honestly, even in an anonymous survey.

“And there’s institutional pressure not to report people who are behaving fraudulently. I know a couple of places where people are aware of fraud and don’t report it – or have reported it, and nothing’s happened.”

As a journalist, I’m pursuing a couple of instances of alleged research fraud where investigations are proceeding at a glacial pace. Universities and journals seize any opportunity to sweep it under the carpet.

Fraser says that it’s hard to believe that research fraud is as prevalent as the “questionable” research practices that she investigated, with more than 50 per cent of researchers confessing to a bit of judicious tweaking. “The point of science is to understand truth,” she points out.

Admittedly, shysters could probably find richer pickings elsewhere. Banking comes to mind.

But if the best defence science can manage is that it’s better than banking, it probably deserves its sceptics.

John Ross is Times Higher Education’s Asia-Pacific editor. He is based in Melbourne.