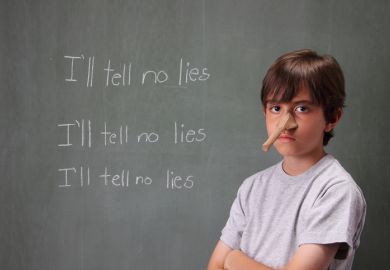

The results of course evaluation surveys can be undermined by students’ inattentiveness while filling them in, a study suggests.

Researchers at a US university conducted an experiment in which they inserted patently false statements into end-of-module questionnaires and found that surprisingly high numbers of undergraduates answered that they were true.

More than two-thirds of students, 69 per cent, endorsed the statement that “the instructor took roll at the beginning, middle and end of every class meeting”.

Nearly a quarter, 24 per cent, agreed that “the instructor was late or absent for all class meetings”, while 28 per cent said that it was true that “the instructor never even attempted to answer any student questions related to the course”.

The experiment, detailed in Assessment & Evaluation in Higher Education, involved students on six psychology courses at Lander University, South Carolina.

In a follow-up survey, 113 students who were not involved in the experiment were asked how seriously they took course evaluations.

On an 11-point scale, the average response was 6.8, but only one in five students said that they took evaluations seriously all the time. More than three-quarters of respondents (76 per cent) said that they sometimes took the process seriously, but at other times they “just bubbled in answers” to finish the survey quickly.

Respondents were doubtful that the results of evaluations would be used to make decisions about promotion and retention, or to improve courses and teaching standards.

The five authors – Jonathan Bassett, Amanda Cleveland, Deborah Acorn, Marie Nix and Timothy Snyder – say that their findings “should not be interpreted as evidence that all student evaluations at all institutions are invalid due to inattentive responding”.

But the research builds on previous papers that found that students are more likely to rate male lecturers highly, and that course grades are positively related to evaluation scores.

“The results of the present study should be taken as initial evidence that inattentive responding can potentially undermine the usefulness of student evaluations of teaching in some cases,” the paper concludes. “The challenge for future research will be to determine just how widespread rates of inattentive responding are in student evaluations of teaching at the university level.”

To boost student attentiveness, the researchers recommend that universities limit the number and length of surveys that students are required to complete, and demonstrate how the results are used to deliver improvements.