Academics and universities that “fetishise” publication in certain journals are the root cause of poor incentives in science – not the design of assessments such as the UK’s research excellence framework, an event on reproducibility has been told.

The fact that measures such as journal impact factors were used to make decisions on REF submissions – even though the framework explicitly stated that it would not use them to assess articles – demonstrated that such metrics were still “part of a culture that exists in universities”, the event heard.

Marcus Munafò, professor of biological psychology at the University of Bristol and chair of the UK Reproducibility Network, told an event in London that much of research culture “remained rooted…in the 19th century”, with ways of working “that are not necessarily fit for purpose any more”.

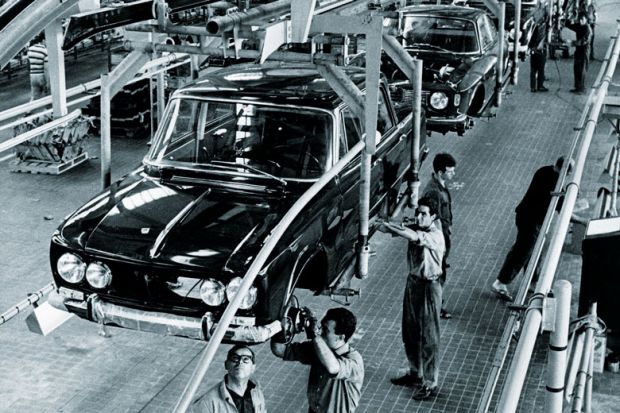

He compared research culture to the 1970s car industry, in which poor-quality vehicles were built without the constituent parts being checked along the way.

“We produce many of our scientific papers without necessarily having a concern about whether they are right”, operating in the belief “that someone will fix them later as somehow science will self-correct”, said Professor Munafò.

But asked whether journals seen as high impact, such as Nature, were a major part of the problem, he said “the problem arises…when we essentially fetishise publication in that kind of journal. It is the institutions in particular and the line managers that do that, not the journal itself.”

This fixation has become so ingrained in research culture that even when funders and assessments such as the REF state that they do not use journal impact factors – a widely criticised metric based on the average number of citations received by articles in a particular journal – “we don’t believe them; it is still part of a culture that exists in universities when it comes to REF preparation and REF submission”, Professor Munafò said.

Earlier, the event – hosted by the British Neuroscience Association at the Sainsbury Wellcome Centre – heard from the head of REF policy at Research England, Helena Mills, who said that although the organisation had repeated “until we’re blue in the face” that journal impact factors were not used in assessments, people “can still turn around and say, ‘You do though, don’t you?’”

However, asked if the REF could do more to insist on open research practices such as data sharing, she accepted that “there is probably a lot more that [future exercises] could do” on reproducibility and open science.

Professor Munafò said that although he hoped the UKRN would help to influence the design of the next REF, there were “levers” in the current exercise that could be used to improve research practices. These included statements made by universities on their research environment, where they could declare what they were doing to support open science.